spfilteR: Semiparametric Spatial Filtering with Eigenvectors in (Generalized) Linear Models

Sebastian Juhl

2025-12-19

Source:vignettes/spfilteR_vignette.Rmd

spfilteR_vignette.RmdIntroduction

The spfilteR package provides tools to implement the

eigenvector-based spatial filtering (ESF) approach put forth by Griffith (2003) in linear and generalized linear

regression models. It allows users to obtain eigenvectors from a

transformed connectivity matrix and supports the supervised and

unsupervised creation of a synthetic spatial filter. For the

unsupervised approach, eigenvectors can be selected based on either i)

the maximization of model fit, ii) the minimization of residual

autocorrelation, iii) the statistical significance of residual

autocorrelation, or iv) the significance level of candidate

eigenvectors. Alternatively, all eigenvectors in the search set may be

included so that no selection takes place. While the functions provide a

high flexibility, only a minimum of code is required to implement the

unsupervised ESF approach.

This vignette solely focuses on the workflow to illustrate the

functionality of the spfilteR package. For a discussion on

the ESF methodology, interested readers may be referred to other

sources, e.g., Griffith, Chun, and Li

(2019) or Tiefelsdorf and Griffith

(2007).

Installation

A stable release version of the spfilteR package is

available on CRAN and the latest development version can be downloaded

from GitHub.

# CRAN release

install.packages("spfilteR")

# GitHub

library(devtools)

devtools::install_github("sjuhl/spfilteR")Getting Started

Together with the main functions, the package contains an artificial

dataset (fakedataset) and a connectivity matrix

(W) connecting the 100 cross-sections on a regular grid by

using the rook criterion of adjacency. For illustrative purposes, the

following examples utilize the fake dataset.

# load the package

library(spfilteR)

# load the dataset

data("fakedata")

# take a look at the connectivity matrix and the variables

dim(W)

#> [1] 100 100

head(fakedataset)

#> ID indicator count x1 x2 x3 negative negcount

#> 1 1 0 0 11.29514 5.527426 6.6406409 -2.338103 9

#> 2 2 0 4 13.92705 7.047870 4.0049350 -2.048737 3

#> 3 3 1 1 17.31431 10.525834 -0.7022595 -1.868379 5

#> 4 4 1 4 15.21778 7.817901 1.5948141 -2.737914 2

#> 5 5 1 3 15.56298 7.903947 -1.2708973 -1.117427 2

#> 6 6 1 2 17.43941 11.254842 -1.4158475 1.577442 2Besides an ID variable, the dataset contains a binary

indicator variable, two count variables, and four

continuous variables.

Identifying Spatial Autocorrelation

In a first step, the function MI.vec() checks for the

presence of spatial autocorrelation in the continuous variables by means

of Moran’s I (see also Cliff and Ord

1981, 1972). The function further allows users to define the

alternative hypothesis in order to obtain p-values.

# select continuous variables

cont <- cbind(fakedataset$x1, fakedataset$x2, fakedataset$x3, fakedataset$negative)

colnames(cont) <- c("x1", "x2", "x3", "negative")

# Moran test of spatial autocorrelation

(I <- MI.vec(x = cont, W = W, alternative = 'greater'))

#> I EI VarI zI pI

#> x1 0.311178214 -0.01010101 0.005344193 4.3948248 5.543107e-06 ***

#> x2 0.168757531 -0.01010101 0.005344193 2.4466317 7.209904e-03 **

#> x3 0.009112739 -0.01010101 0.005344193 0.2628276 3.963417e-01

#> negative -0.244064424 -0.01010101 0.005344193 -3.2004192 9.993139e-01The output suggests that the variables x1 and

x2 are positively autocorrelated at conventional levels of

statistical significance. Moreover, the standardized value of Moran’s

I (zI) indicates that the variable

negative is negatively autocorrelated. We can use the

function MI.vec() and specify

alternative = 'lower' to assess the significance of the

negative autocorrelation:

MI.vec(x = fakedataset$negative, W = W, alternative = 'lower')

#> I EI VarI zI pI

#> 1 -0.2440644 -0.01010101 0.005344193 -3.200419 0.0006861391 ***Since the Moran coefficient is a global measure of spatial

autocorrelation, spatial heterogeneity constitutes a problem for this

statistic. More specifically, the simultaneous presence of positive and

negative spatial autocorrelation at different scales cannot be revealed

by the classical Moran’s I. To circumvent this problem, the

function MI.decomp() decomposes the Moran coefficient into

a positively and a negatively autocorrelated part and performs a

permutation procedure to assess the significance (Dray 2011):

# decompose Moran's I

(I.dec <- MI.decomp(x = cont, W = W, nsim=100))

#> I+ VarI+ pI+ I- VarI- pI-

#> x1 0.3894789 0.001510352 0.00990099 ** -0.0783007 0.001816847 1.00000000

#> x2 0.3048369 0.001983860 0.01980198 * -0.1360794 0.001601069 0.98019802

#> x3 0.2247566 0.001641282 0.32673267 -0.2156438 0.002056313 0.39603960

#> negative 0.1131614 0.001924842 0.99009901 -0.3572259 0.001901218 0.00990099

#> pItwo.sided

#> x1 0.01980198 *

#> x2 0.03960396 *

#> x3 0.65346535

#> negative ** 0.01980198 *Note that the global Moran’s I is the sum of I+

and I-:

# I = 'I+' + 'I-'

cbind(I[, "I"], I.dec[, "I+"] + I.dec[, "I-"])

#> [,1] [,2]

#> [1,] 0.311178214 0.311178214

#> [2,] 0.168757531 0.168757531

#> [3,] 0.009112739 0.009112739

#> [4,] -0.244064424 -0.244064424Unsupervised Spatial Filtering in Linear Models

Assume that we wish to regress x1 on x2 and

test for residual autocorrelation using the function

MI.resid().

# define variables

y <- fakedataset$x1

X <- cbind(1, fakedataset$x2)

# OLS regression

ols.resid <- resid(lm(y ~ X))

# Moran test of residual spatial autocorrelation

MI.resid(resid = ols.resid, W = W, alternative = 'greater')

#> I EI VarI zI pI

#> 1 0.350568 -0.01010101 0.005344193 4.933643 4.035495e-07 ***The results show that the residuals are significantly autocorrelated

which violates the assumption of uncorrelated errors. In order to

resolve this problem of spatial autocorrelation in regression residuals,

the function lmFilter() estimates a spatially filtered

linear regression model using an unsupervised stepwise regression (or a

lasso-based selection) to identify relevant eigenvectors derived from

the transformed connectivity matrix. Below, the unsupervised eigenvector

search algorithm selects eigenvectors based on the reduction in residual

autocorrelation. The output is a class spfilter object.

# ESF model

(lm.esf <- lmFilter(y = y, x = X, W = W, objfn = 'MI', positive = TRUE,

ideal.setsize = TRUE, tol = .2))

#> 9 out of 22 candidate eigenvectors selected

summary(lm.esf, EV = TRUE)

#>

#> - Spatial Filtering with Eigenvectors (Linear Model) -

#>

#> Coefficients (OLS):

#> Estimate SE p-value

#> (Intercept) 8.979538 0.67359052 6.321380e-23 ***

#> beta_1 1.023918 0.08095813 1.379178e-21 ***

#>

#> Adjusted R-squared:

#> Initial Filtered

#> 0.4673945 0.7271686

#>

#> Information Criteria:

#> AIC AICc BIC

#> Initial 425.4662 425.5899 430.6766

#> Filtered 366.9404 369.9404 395.5973

#>

#> Filtered for positive spatial autocorrelation

#> 9 out of 22 candidate eigenvectors selected

#> Objective Function: "MI"

#>

#> Summary of selected eigenvectors:

#> Estimate SE p-value partialR2 VIF MI

#> ev_13 -9.623056 1.443934 2.147786e-09 0.23208263 1.005781 0.6302019 ***

#> ev_4 -3.117054 1.478348 3.780050e-02 0.02873360 1.049729 0.9257835 *

#> ev_10 -5.465326 1.450142 2.944403e-04 0.07901543 1.013360 0.7303271 ***

#> ev_2 4.799492 1.440055 1.253732e-03 0.05886102 1.001118 1.0004147 **

#> ev_9 3.577483 1.466525 1.669440e-02 0.03071488 1.034085 0.7638378 *

#> ev_21 4.082455 1.445443 5.846347e-03 0.04093631 1.007610 0.4539879 **

#> ev_5 -2.924657 1.448955 4.655373e-02 0.02055372 1.011899 0.8968632 *

#> ev_19 3.789599 1.439787 1.000406e-02 0.03671349 1.000798 0.4615722 *

#> ev_14 2.222939 1.457704 1.308151e-01 0.01140690 1.022793 0.6121155

#>

#> Moran's I ( Residuals):

#> Observed Expected Variance z p-value

#> Initial 0.35056797 -0.01192610 0.01207299 3.2990854 0.0004850019 ***

#> Filtered -0.05084639 -0.08474192 0.05080919 0.1503735 0.4402349689While the print method shows that 9 eigenvectors were

selected from the candidate set consisting of 22 eigenvectors, the

summary method provides additional information. Besides the

ordinary least squares (OLS) parameter estimates, the output shows that

the ESF model filters for positive spatial autocorrelation using the

minimization of residual autocorrelation as objective function during

the eigenvector search. A comparison between the filtered and the

nonspatial OLS model with respect to model fit and residual

autocorrelation is also provided. Since the option EV is

set to TRUE, the summary method also displays

information on the selected eigenvectors. As the results show, the ESF

model successfully removes the spatial pattern from model residuals.

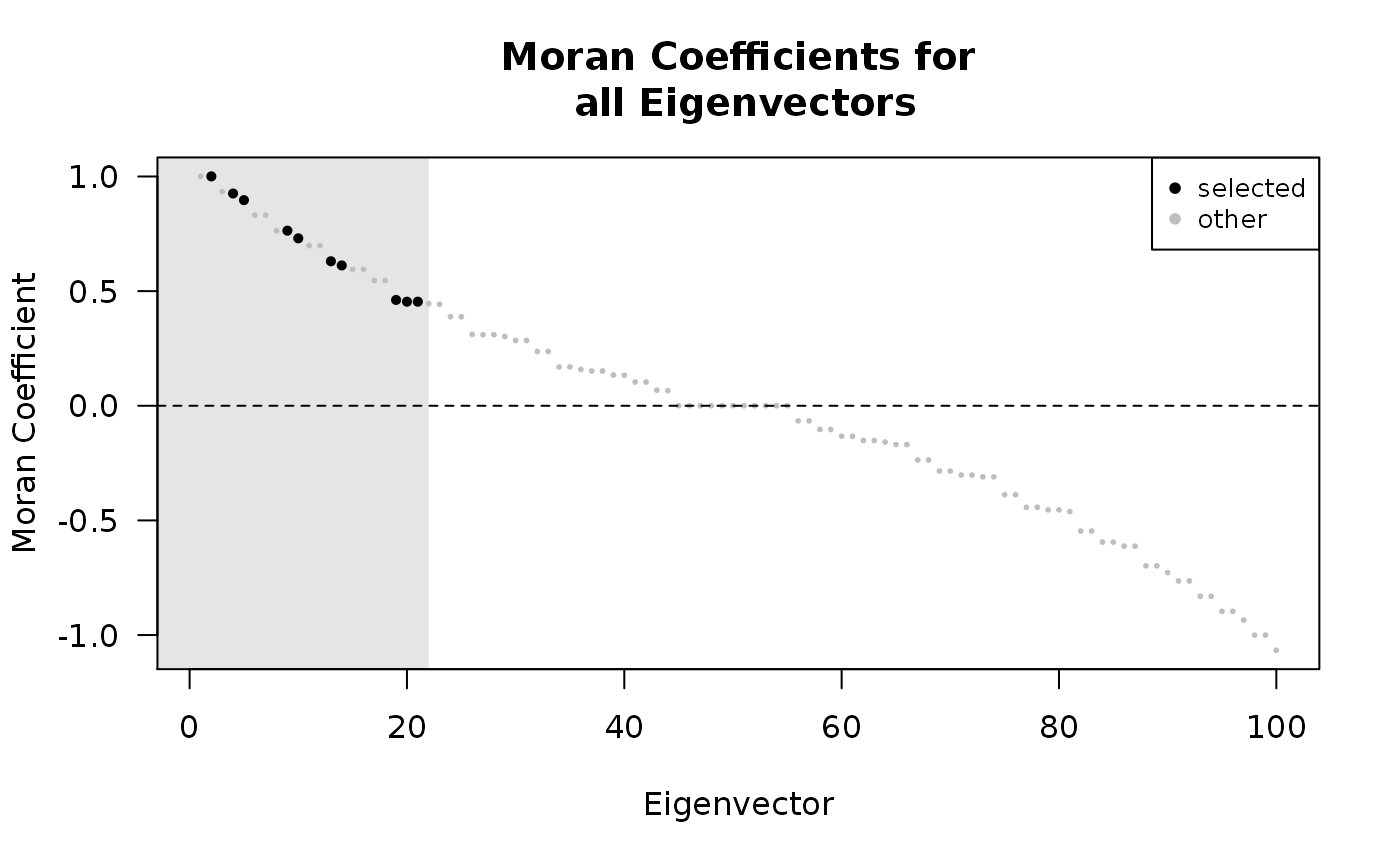

The plot method allows for an easy visualization of the

results. The graph displays the Moran coefficient associated with each

of the eigenvectors. The shaded area signifies the candidate set and

selected eigenvectors are illustrated by black dots.

plot(lm.esf)

Moreover, lmFilter() also allows users to select

eigenvectors based on alternative selection criteria:

### Alternative selection criteria:

# maximization of model fit

lmFilter(y = y, x = X, W = W, objfn = 'R2', positive = TRUE, ideal.setsize = TRUE)

#> 11 out of 22 candidate eigenvectors selected

lmFilter(y = y, x = X, W = W, objfn = 'AIC', positive = TRUE, ideal.setsize = TRUE)

#> 10 out of 22 candidate eigenvectors selected

lmFilter(y = y, x = X, W = W, objfn = 'AICc', positive = TRUE, ideal.setsize = TRUE)

#> 9 out of 22 candidate eigenvectors selected

lmFilter(y = y, x = X, W = W, objfn = 'BIC', positive = TRUE, ideal.setsize = TRUE)

#> 7 out of 22 candidate eigenvectors selected

# significance of residual autocorrelation

lmFilter(y = y, x = X, W = W, objfn = 'pMI', sig = .1, bonferroni = FALSE,

positive = TRUE, ideal.setsize = TRUE)

#> 3 out of 22 candidate eigenvectors selected

# significance of eigenvectors

lmFilter(y = y, x = X, W = W, objfn = 'p', sig = .1, bonferroni = TRUE,

positive = TRUE, ideal.setsize = TRUE)

#> 3 out of 22 candidate eigenvectors selected

# all eigenvectors in the candidate set

lmFilter(y = y, x = X, W = W, objfn = 'all', positive = TRUE, ideal.setsize = TRUE)

#> 22 out of 22 candidate eigenvectors selected

# Moran's I lasso

lmFilter(y = y, x = X, W = W, objfn = 'MI-lasso', positive = TRUE, conditional.se = TRUE)

#> 31 out of 31 candidate eigenvectors selectedIf users wish to select eigenvectors based on individual selection

criteria, they can obtain the eigenvectors using the function

getEVs() and perform a supervised selection procedure using

the basic stats::lm()

command.

Extension to Generalized Linear Models

The ESF approach outlined above easily extends to generalized linear

models (GLMs) as well (see also Griffith, Chun,

and Li 2019). Therefore, the spfilteR package

contains the function glmFilter() which uses maximum

likelihood estimation (MLE) to fit a spatially filtered GLM and performs

an unsupervised eigenvector search based on alternative objective

functions.

Except for minor differences, glmFilter() works similar

to the lmFilter() function discussed above. The option

model defines the model that needs to be estimated.

Currently, unsupervised spatial filtering is possible for logit, probit,

poisson, and negative binomial models. Moreover, the option

optim.method specifies the optimization method used to

maximize the log-likelihood function. Finally, resid.type

allows users to define the type of residuals used to calculate Moran’s

I and boot.MI is an integer specifying the number

of bootstrap permutations to obtain the variance of Moran’s

I.

# define outcome variables

y.bin <- fakedataset$indicator

y.count <- fakedataset$count

# ESF logit model

(logit.esf <- glmFilter(y = y.bin, x = NULL, W = W, objfn = 'p', model = 'logit',

optim.method = 'BFGS', sig = .1, bonferroni = FALSE,

positive = TRUE, ideal.setsize = FALSE, alpha = .25,

resid.type = 'deviance', boot.MI = 100))

#> 6 out of 31 candidate eigenvectors selected

# ESF probit model

(probit.esf <- glmFilter(y = y.bin, x = NULL, W = W, objfn = 'p', model = 'probit',

optim.method = 'BFGS', sig = .1, bonferroni = FALSE,

positive = TRUE, ideal.setsize = FALSE, alpha = .25,

resid.type = 'deviance', boot.MI = 100))

#> 6 out of 31 candidate eigenvectors selected

# ESF poisson model

(poisson.esf <- glmFilter(y = y.count, x = NULL, W = W, objfn = 'AICc', model = 'poisson',

optim.method = 'BFGS', positive = TRUE, ideal.setsize = FALSE,

alpha = .25, resid.type = 'deviance', boot.MI = 100))

#> 9 out of 31 candidate eigenvectors selected

# ESF negative binomial model

(nb.esf <- glmFilter(y = y.count, x = NULL, W = W, objfn = 'AICc', model = 'nb',

optim.method = 'BFGS', positive = TRUE, ideal.setsize = FALSE,

alpha = .25, resid.type = 'deviance', boot.MI = 100))

#> 0 out of 31 candidate eigenvectors selectedAgain, if users wish to define individual selection criteria or fit a

GLM currently not implemented in glmFilter(), they can

obtain the eigenvectors using the getEVs() command and

perform supervised eigenvector selection using the standard stats::glm()

function.